Libb Thims

(1972-)

Libb Thims is an American electrochemical engineer who is building the extraordinary web-based Encyclopedia of Human Thermodynamics at EoHT.info. He calls this valuable knowledge base on the work of hundreds of scientists, engineers, and philosophers the "Hmolpedia" (a human molecule encyclopedia).

He is a prolific writer and has published several books exploring his hypothesis that chemical thermodynamics can be used to explain many aspects of human life. His two-volume Human Chemistry explores the relationship between chemical bonding and sexual bonding, a scientific look at the popular idea that "love is a chemical reaction."

His slim and highly readable 2008 volume The Human Molecule contains a valuable history of the idea that a human being can be reduced to its chemical contents. He tells us of many great thinkers who explicitly describe humans as molecules, including Hippolyte Taine, Vilfredo Pareto, Henry Adams, Teilhard de Chardin, and Charles Galton Darwin.

In 2010, Anthony Cashmore, a plant biologist and member of the National Academy of Sciences, described animals, including human beings, as a "bag of chemicals" entirely determined by the laws of physics and chemistry. He says, "we live in an era when few biologists would question the idea that biological systems are totally based on the laws of physics and chemistry." Like Thims, Cashmore sees the biological basis of human behavior as chemistry.

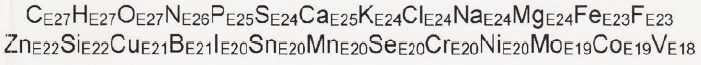

Back in 2002, Thims began using tables of the amounts of various chemical elements in a typical adult human to write the chemical formula for his "human molecule." He came up with this formula, which has 26 elements. The subscripts are read as e27, etc.

Thims hopes to describe relationships between humans in terms of chemical thermodynamics, particularly the sexual relation in which a man and woman produce a child. He writes...

AB + CD → A≡C + BD

"where A is the man, C is the woman, B and D are germ cells (sperm and egg, respectively), A≡C is the man and women chemically “bonded” in a relationship or marriage, and BD is the new child or sperm and egg chemically fused."

He says that as of 2006, he was forced

"to write a basic treatise on (a) human chemical reaction theory and (b) human chemical bond theory, as reaction models and bond are basic components in the starting point inf the science of chemical thermodynamics."

"before anyone can even attempt to write a basic book on “human chemical thermodynamics”, as I am attempting now to do (see:

pdf), reaction models and bond models have to be established first, not to mention one has to establish what a human is, from the chemical thermodynamic viewpoint (hence the 2008 Human Molecule booklet)."

Thims is a strong determinist who denies the existence of ontological chance. As a consequence, he also denies human free will. He is an exponent of what he calls "smart atheism." He wrote this extensive web page on himself.

Trained as a chemical engineer, Thims is greatly bothered by scientists and others who make a connection between thermodynamics and "information theory," beginning with Claude Shannon, who called his formula for information "entropy" at the suggestion of John von Neumann, because the formula for Ludwig Boltzmann's statistical mechanical entropy had the same mathematical form, and both were summations over the probabilities of various states of a system.

Thims and Shannon's "Bandwagon"

Thims says there is nothing in information theory that is thermodynamics. Shannon himself was embarrassed by the many thinkers who jumped on what he called the "bandwagon" of information theory.

Thims has researched hundreds of examples of writers who assert the lack of connections between classical phenomenological thermodynamics, with laws that are relations between macroscopic variables, pressure, volume, temperature, chemical potentials, etc., and information theory, which is the mathematical theory of communications.

Thims says: "The equations used in [Shannon's] communication theory have absolutely nothing to do with the equations used in thermodynamics." That is true. Classical chemical thermodynamics HAS NOTHING TO DO with information theory. He is right.

Thims sometimes adds that statistical mechanics has nothing to do with information theory. That is not true.

To make sense of this, we should not be comparing information to Carnot-Clausius classical thermodynamics, which has no concept of multiple possibilities with different probabilities, nor of the "logarithm of probabilities" that became entropy in the statistical mechanics of Boltzmann and Gibbs. Statistical mechanics has a LOT TO DO with information theory.

Boltzmann entropy and Shannon entropy have different dimensions (S = joules/degree, I = dimensionless "bits"), but they share the "mathematical isomorphism" of a logarithm of probabilities.

Boltzmann entropy: S = -k ∑ pᵢ ln pᵢ. Shannon information: I = -∑ pᵢ ln pᵢ.
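The shared mathematical form can be checked numerically. The following is a minimal sketch, not anything taken from Thims or Shannon: the function names and the example distribution are illustrative assumptions, and it assumes the conventional minus sign in both sums and a base-2 logarithm for the Shannon sum so that the result comes out in bits (a natural logarithm would give the same quantity in "nats").

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in joules per kelvin (SI defined value)


def shannon_bits(probs):
    """Shannon information I = -sum(p * log2(p)), in dimensionless bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Statistical-mechanical entropy S = -k * sum(p * ln(p)), in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical probability distribution over four states (illustrative only).
probs = [0.5, 0.25, 0.125, 0.125]

bits = shannon_bits(probs)
entropy = gibbs_entropy(probs)

print("Shannon information (bits):", bits)
print("Statistical entropy (J/K): ", entropy)
# The two numbers differ only by the constant factor k * ln(2).
print("S / (k ln 2):              ", entropy / (K_B * math.log(2)))
```

Under these assumptions the two quantities differ only by the constant factor k ln 2, which carries the physical units. That is the sense in which the formulas are isomorphic while having different dimensions.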

They both depend on the reality of "chance" and indeterminism that Albert Einstein showed is part of quantum mechanics ten years before Werner Heisenberg's "uncertainty."

Boltzmann entropy and Shannon entropy are both based on ontological chance, which Albert Einstein discovered in 1916. He seriously disliked it, but showed that quantum mechanics could not do without it.

As Thims claims in a lengthy (120-page) article, Thermodynamics ≠ Information Theory, Sadi Carnot's phenomenological entropy has nothing to do with Shannon's information communication entropy.

Thermodynamic entropy involves matter and energy; Shannon entropy is entirely mathematical, on one level purely immaterial information, though information cannot exist without "negative" thermodynamic entropy.

It is true that information is neither matter nor energy, which are conserved constants of nature (the first law of thermodynamics). But information needs matter to be embodied in an "information structure." And it needs ("free") energy to be communicated over Shannon's information channels.

Boltzmann entropy is intrinsically related to "negative entropy." Without pockets of negative entropy in the universe (and out-of-equilibrium free-energy flows), there would be no "information structures" anywhere.

Pockets of negative entropy are involved in the creation of everything interesting in the universe. It is a cosmic creation process without a creator.

Annotated version of Thims' Thermodynamics ≠ Information Theory
